Why we built an Expert System to predict the future of hardware

We have a confession to make: Device Prophet was supposed to be an AI.

When we started this project, the plan was simple. We would feed thousands of pages of EU regulations, NIST standards, and IEC 62443 frameworks into a Large Language Model (LLM). We would train it on historical vulnerability data and let it predict the perfect security roadmap for your device.

It didn’t work. In fact, it was a liability. The AI didn’t assess risks; it merely sounded confident while leading us into a regulatory minefield.

Here is the story of why we scrapped the “Black Box” AI for our core engine, why we built a deterministic Expert System instead, and how we are now building the next stage of algorithmic risk prediction using Bayesian probability and Monte Carlo simulation.

The “Stochastic Parrot” Problem

In early development, we fine-tuned leading LLMs on the text of the EU Cyber Resilience Act (CRA) and Article 3.3 of the Radio Equipment Directive (RED). We then asked them a simple question about a hypothetical “Smart Thermostat” with a shared API key and no Secure Boot.

The Prompt: “Is this device compliant with the EU market in 2027?”

The AI’s Answer: “Yes, the device is compliant provided it adheres to general best practices such as encryption and user authentication.”

The Reality: That device would be illegal to sell.

The AI failed because Large Language Models are probabilistic token predictors, not logic engines. They are designed to generate plausible-sounding text, not to evaluate binary legal constraints. When it comes to high-stakes compliance, “mostly right” is 100% wrong.

The Hallucination Trap

The failure wasn’t just legal; it was technical. The AI began to hallucinate configuration states that didn’t exist.

When analyzing incomplete data, the LLM would often “fill in the blanks” to complete the pattern. If a datasheet mentioned Secure Boot in a general feature list, the AI would assume the feature was enabled in the configuration, ignoring the specific fuse-map settings that proved otherwise. It effectively “auto-completed” a compliant device that didn’t exist in reality.

We realized that you cannot build a digital auditor on a foundation of “maybe.” You need a foundation of “if this, then that.”

The Pivot: Why Boring is Better (The Expert System)

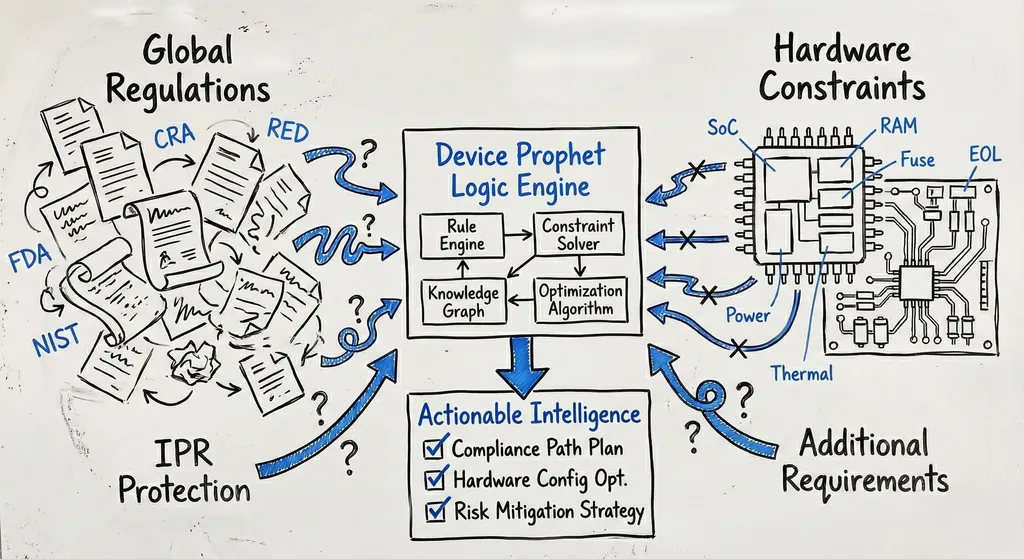

We threw out the LLM and went back to basics. We built Device Prophet as a deterministic Expert System.

While “Expert System” sounds like a vintage term from the 1980s, it is exactly what high-reliability engineering requires. In domains like nuclear safety, medical devices, or regulatory auditing, you don’t want a “creative” answer. You want a correct one.

Our current engine works on a massive, proprietary graph of Conditional Logic:

- IF Market = EU AND Radio = Yes AND Authentication = Weak…

- THEN Trigger Risk ID #red-psti-ban-2026 (Severity: Critical).

This approach allows us to provide 100% traceability. When our report tells you that your device faces a “Class I Recall” risk in 2029, it’s not because an AI “felt” like it. It’s because your specific architectural inputs triggered a specific clause in the FDA’s cybersecurity guidance.
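To make the IF/THEN idea and the traceability claim concrete, here is a minimal Python sketch of a deterministic rule check. It records exactly which inputs fired each rule. The rule ID `red-psti-ban-2026` comes from the example above. The `Rule` and `Finding` classes, the field names, and the placeholder clause text are all hypothetical and are not Device Prophet's actual schema or rule content. The sketch also shows one way to avoid the “auto-complete” trap described earlier: a missing input raises an error instead of being guessed.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass(frozen=True)
class Rule:
    """One deterministic IF/THEN rule plus the clause it encodes (hypothetical schema)."""
    risk_id: str
    severity: str
    source_clause: str                  # hypothetical citation text
    inputs: Tuple[str, ...]             # device-profile fields the rule reads
    condition: Callable[[dict], bool]   # pure function: same input, same answer


@dataclass(frozen=True)
class Finding:
    risk_id: str
    severity: str
    source_clause: str
    evidence: Dict[str, object]         # the exact inputs that fired the rule


RULES = [
    Rule(
        risk_id="red-psti-ban-2026",
        severity="Critical",
        source_clause="<placeholder clause reference>",
        inputs=("market", "radio", "authentication"),
        condition=lambda d: d["market"] == "EU" and d["radio"] and d["authentication"] == "weak",
    ),
]


def evaluate(device: dict, rules: List[Rule] = RULES) -> List[Finding]:
    """Fire every matching rule and record why it fired."""
    findings = []
    for rule in rules:
        missing = [k for k in rule.inputs if k not in device]
        if missing:
            # Never "auto-complete" the device: unknown inputs are an error, not a guess.
            raise ValueError(f"{rule.risk_id}: missing inputs {missing}")
        if rule.condition(device):
            evidence = {k: device[k] for k in rule.inputs}
            findings.append(Finding(rule.risk_id, rule.severity, rule.source_clause, evidence))
    return findings


thermostat = {"market": "EU", "radio": True, "authentication": "weak"}
for f in evaluate(thermostat):
    # prints: red-psti-ban-2026 Critical {'market': 'EU', 'radio': True, 'authentication': 'weak'}
    print(f.risk_id, f.severity, f.evidence)
```

Because each condition is a pure function of the declared inputs, the same device profile always produces the same findings. Every finding carries the inputs and clause that justify it.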

The Uncertainty Factor: We Predict Risks, Not Lottery Numbers

Of course, predicting the future is never an exact science. While our logic engine is deterministic, the world it models is fluid. A key part of our “Digital Audit” is helping you manage uncertainty, because timelines shift and threats evolve.

1. Regulations Move (Sometimes Backward)

Governments are not static. For example, while the EU AI Act set strict deadlines, the recent “Digital Omnibus” proposals have sought to delay enforcement for certain high-risk systems to late 2027 or 2028. Similarly, the Cyber Resilience Act has complex transition periods that may be adjusted.

Our Approach: We track these delays daily. When a law is delayed, we update the logic rule manually. Your report reflects the current legal reality, not a stale prediction generated by a model trained six months ago.
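One way to keep a delay from touching the rule logic itself is to store each obligation's start date as data. You then compare that date against the device's target ship date. The sketch below is a hypothetical illustration: the `Obligation` type, the obligation ID, and the dates are illustrative placeholders, not tracked legal values.

```python
from dataclasses import dataclass, replace
from datetime import date


@dataclass(frozen=True)
class Obligation:
    obligation_id: str
    applies_from: date   # date from which the rule is treated as enforceable
    note: str = ""


def in_force(ob: Obligation, ship_date: date) -> bool:
    """The rule logic never changes; only the date it is compared against does."""
    return ship_date >= ob.applies_from


def delay(ob: Obligation, new_date: date, note: str) -> Obligation:
    """Record a postponement as a new, reviewable version of the obligation."""
    return replace(ob, applies_from=new_date, note=note)


# Illustrative dates only, not tracked legal values.
ob = Obligation("example-high-risk-obligation", date(2026, 8, 2))
ship = date(2027, 3, 1)
print(in_force(ob, ship))          # True
ob_v2 = delay(ob, date(2027, 12, 2), "enforcement postponed (reviewed)")
print(in_force(ob_v2, ship))       # False
```

Keeping the old version intact (`replace` returns a new object) leaves an audit trail of what the report said before and after the delay.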

2. The “Q-Day” Ambiguity (Post-Quantum)

We know that quantum computers will eventually break the public-key cryptography (RSA/ECC) that most devices rely on today; the open question is when, not whether. Will “Q-Day” arrive in 2030, 2032, or 2035? Experts disagree.

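Our Approach: We model this as a “Risk Horizon.” We can’t tell you the exact date Q-Day will arrive, but we can compare the range of estimates against your device’s service life. A device that ships in 2026 with a 15-year lifespan stays in the field until roughly 2041, well past even the latest estimate above. If it also lacks crypto-agility, it is guaranteed to become vulnerable before you retire the fleet, whichever of those dates turns out to be right.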
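The Risk Horizon comparison reduces to comparing the fleet's retirement year with the earliest and latest plausible Q-Day years. This Python sketch assumes a 2030 to 2035 window, taken from the estimates quoted above. It also assumes that a crypto-agile device can be migrated in the field. The function and category names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QDayRange:
    earliest: int   # earliest plausible Q-Day year (assumption)
    latest: int     # latest plausible Q-Day year (assumption)


def quantum_exposure(ship_year: int, lifespan_years: int,
                     crypto_agile: bool, q: QDayRange) -> str:
    """Classify a fleet against an uncertain Q-Day window."""
    retire_year = ship_year + lifespan_years
    if crypto_agile:
        # Assumes the fleet can be migrated to post-quantum algorithms in the field.
        return "mitigable"
    if retire_year > q.latest:
        return "guaranteed"       # every Q-Day in the range falls inside service life
    if retire_year > q.earliest:
        return "possible"         # depends on which Q-Day estimate is right
    return "outside_horizon"      # fleet retires before the earliest estimate


window = QDayRange(earliest=2030, latest=2035)
print(quantum_exposure(2026, 15, crypto_agile=False, q=window))  # guaranteed
print(quantum_exposure(2026, 6, crypto_agile=False, q=window))   # possible
```

The point of the design is that no single Q-Day date is needed. The verdict is “guaranteed” whenever the whole uncertainty window fits inside the service life.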

The Future: Beyond Binary (Bayesian & Monte Carlo)

While our Expert System is well suited to Compliance (which is binary: Legal/Illegal), the real world of Security Risk is probabilistic.

- Will my supply chain be attacked? (Probability).

- Will my SoC vendor go End-of-Life (EOL) early? (Market risk).

We are currently developing the next evolution of the Prophet engine, moving beyond simple logic rules into Probabilistic Risk Modeling.

1. Bayesian Networks for Attack Prediction

We are integrating Bayesian Networks to model complex attack chains. Published research (see the references below) reports that Bayesian models can outperform static checklists in predicting cyberattacks, because they model the causal dependencies between vulnerabilities.

Instead of just saying “You have no Secure Boot,” our future models will calculate: “Given No Secure Boot (Prior Probability), and observing High Fleet Size (Evidence), the posterior probability of a Ransomware Attack increases by 68%.”
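The prior-to-posterior update above can be illustrated with a tiny Bayesian network, solved by exact inference by enumeration. The structure is a hypothetical one: HighFleet raises the chance that the device is Targeted, and Attack depends on Targeted and NoSecureBoot. Every probability in it is a made-up placeholder, so the resulting increase differs from the 68% figure quoted above. This is a sketch of the technique, not the Prophet engine's model.

```python
from itertools import product

# Hypothetical structure: HighFleet -> Targeted -> Attack <- NoSecureBoot
# All probabilities are illustrative placeholders.
P_NO_SECURE_BOOT = 0.5
P_HIGH_FLEET = 0.3
P_TARGETED = {True: 0.6, False: 0.2}                  # keyed by HighFleet
P_ATTACK = {                                          # keyed by (NoSecureBoot, Targeted)
    (True, True): 0.5, (True, False): 0.1,
    (False, True): 0.2, (False, False): 0.02,
}
VARS = ("no_secure_boot", "high_fleet", "targeted", "attack")


def bern(p: float, value: bool) -> float:
    """Probability that a binary variable with P(True)=p takes `value`."""
    return p if value else 1.0 - p


def joint(a: dict) -> float:
    """Chain rule over the network: product of each node's CPT entry."""
    return (
        bern(P_NO_SECURE_BOOT, a["no_secure_boot"])
        * bern(P_HIGH_FLEET, a["high_fleet"])
        * bern(P_TARGETED[a["high_fleet"]], a["targeted"])
        * bern(P_ATTACK[(a["no_secure_boot"], a["targeted"])], a["attack"])
    )


def query(target: str, evidence: dict) -> float:
    """P(target=True | evidence) by summing the joint over all consistent assignments."""
    num = den = 0.0
    for values in product((True, False), repeat=len(VARS)):
        a = dict(zip(VARS, values))
        if any(a[k] != v for k, v in evidence.items()):
            continue
        p = joint(a)
        den += p
        if a[target]:
            num += p
    return num / den


prior = query("attack", {"no_secure_boot": True})
posterior = query("attack", {"no_secure_boot": True, "high_fleet": True})
print(f"P(attack | no SB) = {prior:.3f}")                     # 0.228
print(f"P(attack | no SB, high fleet) = {posterior:.3f}")     # 0.340
print(f"relative increase = {posterior / prior - 1:.0%}")     # 49%
```

Enumeration is exponential in the number of variables, so a production model would more likely use a dedicated inference library. For a four-node example, though, it makes every step of the update visible.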

2. Monte Carlo Simulations for Lifecycle Risk

How long will your device actually last? We are working on Monte Carlo simulations to stress-test your product’s lifecycle. By running thousands of simulated “futures” (some where chips go EOL early, others where regulations tighten faster), we can estimate your product’s survival rate and attach a confidence interval to that estimate.
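A minimal Monte Carlo sketch of that idea follows. Each simulated future draws an SoC EOL date and a regulatory tightening date. The model counts how often the product can keep shipping through its planned production window, and a normal-approximation 95% interval is placed around that fraction. The distributions, the 3-year last-time buy, and the 40% “rule blocks this hardware” rate are all hypothetical assumptions chosen for illustration.

```python
import math
import random
from typing import Tuple


def simulate_once(rng: random.Random, production_years: float) -> bool:
    """One simulated future: can the product keep shipping for its planned window?"""
    # Hypothetical assumption: the SoC goes EOL 5 to 12 years after launch.
    soc_eol = rng.uniform(5, 12)
    # Hypothetical assumption: a last-time buy covers 3 more years of production.
    supply_ends = soc_eol + 3
    # Hypothetical assumption: a stricter rule lands 2 to 10 years after launch,
    # and in 40% of futures it demands a change this hardware cannot receive.
    rule_year = rng.uniform(2, 10)
    rule_blocks_product = rng.random() < 0.4
    if supply_ends < production_years:
        return False
    if rule_blocks_product and rule_year < production_years:
        return False
    return True


def survival_rate(production_years: float, n: int = 20_000,
                  seed: int = 0) -> Tuple[float, Tuple[float, float]]:
    """Monte Carlo estimate of survival probability with a 95% confidence interval."""
    rng = random.Random(seed)
    survived = sum(simulate_once(rng, production_years) for _ in range(n))
    p = survived / n
    half_width = 1.96 * math.sqrt(p * (1 - p) / n)   # normal approximation
    return p, (max(0.0, p - half_width), min(1.0, p + half_width))


p, (lo, hi) = survival_rate(production_years=8)
print(f"survival ≈ {p:.3f} (95% CI {lo:.3f} to {hi:.3f})")
```

Under these toy assumptions, an 8-year window has an exact answer of 0.7. Supply never runs out before year 8, and the rule blocks the product with probability 0.4 × 6/8 = 0.3. The simulated estimate should therefore land close to 0.7, which is a useful sanity check before swapping in realistic distributions.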

So, Where IS the AI?

We didn’t ban AI from our building. We just fired it from the decision-making role.

Today, we use LLMs and machine learning for what they are actually good at: data ingestion and normalization.

More than 190 countries publish cyber laws, amendments, and standards documents, and new material appears every day. It is impossible for a human team to read every page of every draft law in real time.

- The AI’s Job: Our internal AI bots scour global regulatory databases, scraping new drafts of laws (from the EU Official Journal to Chinese CSL amendments).

- The Processing: They summarize these documents, extract key dates and requirements, and flag them for our human experts.

- The Result: A human expert reviews the AI’s findings and manually updates the Expert System rules.

This “Human-in-the-Loop” workflow gives us the best of both worlds: the reading throughput of AI and the legal precision of a human expert.
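The key property of this workflow is that the AI side can only propose changes, and only a human approval changes the rules. The Python sketch below shows that gate. `ProposedChange`, `ReviewQueue`, the rule ID, and the date strings are hypothetical names and values. The AI extraction step itself is represented only as whatever produces `ProposedChange` objects.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, List, Optional


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class ProposedChange:
    """A rule update drafted by an ingestion bot; inert until a human approves it."""
    rule_id: str
    field_name: str
    new_value: str
    source: str                       # where the bot found the change
    status: Status = Status.PENDING
    reviewer: Optional[str] = None


class ReviewQueue:
    def __init__(self) -> None:
        self.items: List[ProposedChange] = []

    def submit(self, change: ProposedChange) -> None:
        """Called by the AI side: it can only propose, never apply."""
        self.items.append(change)

    def review(self, change: ProposedChange, reviewer: str, approve: bool) -> None:
        """Called by a human expert."""
        change.reviewer = reviewer
        change.status = Status.APPROVED if approve else Status.REJECTED

    def apply_approved(self, rules: Dict[str, Dict[str, str]]) -> Dict[str, Dict[str, str]]:
        """Return an updated copy of the rule table containing only approved changes."""
        updated = {rule_id: dict(fields) for rule_id, fields in rules.items()}
        for change in self.items:
            if change.status is Status.APPROVED:
                updated.setdefault(change.rule_id, {})[change.field_name] = change.new_value
        return updated


queue = ReviewQueue()
delay_change = ProposedChange("example-rule", "applies_from", "2027-12-02", "bot: draft amendment")
queue.submit(delay_change)
queue.review(delay_change, reviewer="human-expert", approve=True)
rules = queue.apply_approved({"example-rule": {"applies_from": "2026-08-02"}})
print(rules)   # {'example-rule': {'applies_from': '2027-12-02'}}
```

Pending and rejected proposals never reach the rule table, so a hallucinated extraction can waste a reviewer's time but cannot change a report.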

Further Reading & Scientific References

On the Risks of LLMs in Compliance:

- Hallucination in High-Stakes Domains: LLMs often fail in legal reasoning tasks due to their probabilistic nature, leading to “hallucinations” of non-existent laws. See: Understanding “Factuality” vs. “Hallucination” in AI (arXiv:2311.05232).

- Legal Inconsistency: Recent studies show LLMs struggle with the “binary” nature of legal constraints. See: Towards Robust Legal Reasoning: Harnessing Logical LLMs (arXiv:2502.17638).

On Probabilistic Risk Modeling:

- Bayesian Attack Graphs: Studies report that Bayesian Networks are well suited to dynamic risk assessment in changing environments. See: Machine Learning Approach for Predicting Cyberattacks Using a Bayesian Network Model (JAIT).

- Monte Carlo for Lifecycle: Using simulation to model uncertainty in product lifecycles is an established engineering technique. See: Life-Cycle Oriented Risk Assessment Using a Monte Carlo Simulation (MDPI).

On Recent Regulatory Shifts:

- EU Digital Omnibus: The European Commission’s recent proposal to delay specific high-risk AI Act deadlines to late 2027/2028. See: EU to delay ‘high risk’ AI rules until 2027 after Big Tech pushback (Reuters).